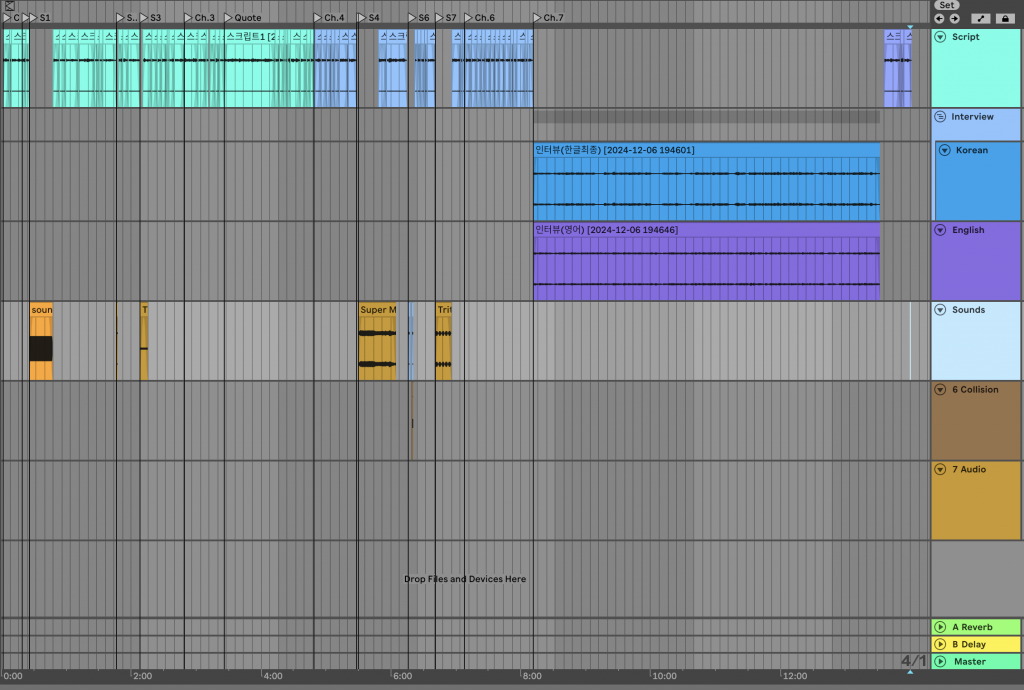

Today I created, extracted, and placed the music and sound effects for our audio paper. First, I edited the script recordings so that they flowed together smoothly, and I added locators marking where each piece of music should go between them.

The sounds I needed were:

1. A tone gradually increasing from 50 Hz to 20,000 Hz

2. An imitation of an elephant’s death cry

3. A high-frequency tone gradually increasing in volume from the left speaker

4. Endless staircase music from Super Mario 64

5. Speech intermittently cutting out

6. Same speech with background noise masking the gaps

7. Tritone Paradox example

8. News audio as perceived by cochlear implant users

9. Processed music / Original music

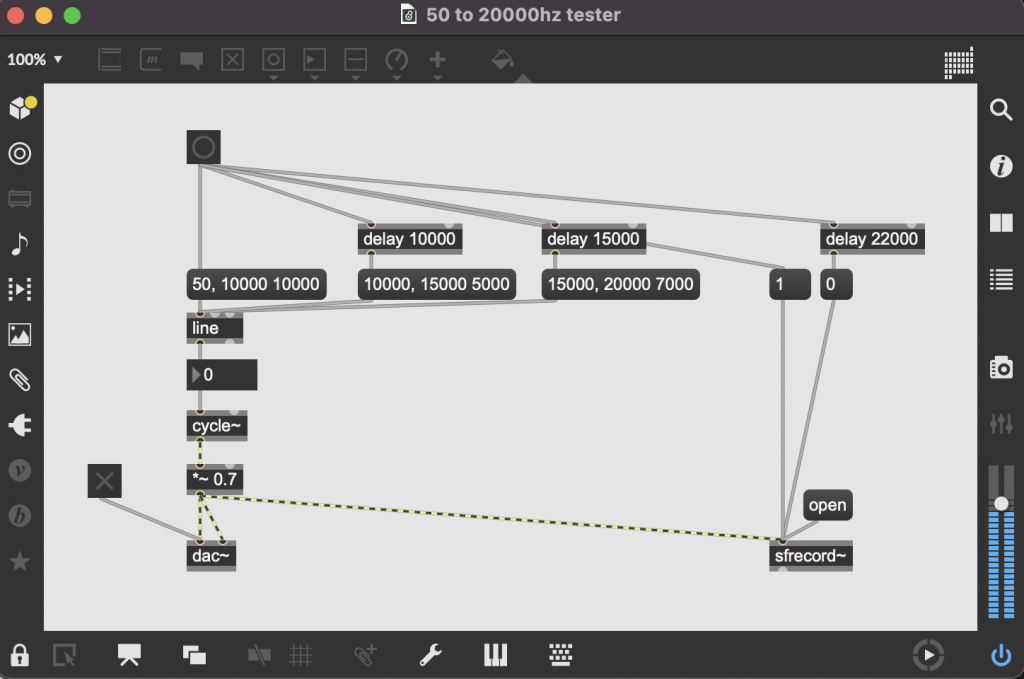

I created the first example in Max/MSP. Initially, I used line objects driving cycle~ to make a tone whose frequency rose gradually. However, because my audio paper was already well over the 10-minute limit, I needed to use the time as efficiently as possible. I therefore swept fairly quickly from 50 Hz to 15,000 Hz, a range most people can hear, and then spent a little longer between 15,000 Hz and 20,000 Hz, where differences between listeners become noticeable.
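As a rough illustration of the two-speed sweep, here is a minimal Python sketch of the same idea outside Max/MSP: frequency is interpolated linearly within each segment (roughly what line objects feeding cycle~ do), and phase is accumulated sample by sample so the tone stays continuous. The segment durations (8 s and 12 s), sample rate, amplitude, and function names are placeholder assumptions, not values taken from my patch.

```python
import math
import struct
import wave

SAMPLE_RATE = 44100  # assumed; must exceed 40,000 Hz to represent 20,000 Hz

# Hypothetical plan: (start_hz, end_hz, seconds). The first segment is fast,
# the second lingers where hearing differs between listeners.
SEGMENTS = [(50.0, 15000.0, 8.0), (15000.0, 20000.0, 12.0)]


def frequency_at(t, segments=SEGMENTS):
    """Linearly interpolated frequency (Hz) at time t (seconds)."""
    for start_hz, end_hz, dur in segments:
        if t < dur:
            return start_hz + (end_hz - start_hz) * (t / dur)
        t -= dur
    return segments[-1][1]  # hold the final frequency after the last segment


def render_sweep(segments=SEGMENTS, sr=SAMPLE_RATE, amp=0.3):
    """Render the sweep as floats in [-amp, amp] by accumulating phase."""
    total = sum(dur for _, _, dur in segments)
    n = int(total * sr)
    phase = 0.0
    out = []
    for i in range(n):
        out.append(amp * math.sin(phase))
        # Advance phase by this sample's instantaneous frequency (no clicks).
        phase = (phase + 2.0 * math.pi * frequency_at(i / sr, segments) / sr) % (2.0 * math.pi)
    return out


def write_mono_wav(path, samples, sr=SAMPLE_RATE):
    """Write float samples as 16-bit mono PCM, clipping to [-1, 1]."""
    with wave.open(path, "wb") as wf:
        wf.setnchannels(1)
        wf.setsampwidth(2)
        wf.setframerate(sr)
        wf.writeframes(b"".join(
            struct.pack("<h", int(max(-1.0, min(1.0, s)) * 32767)) for s in samples))
```

Calling `write_mono_wav("sweep.wav", render_sweep())` would produce the whole 20-second file; splitting the sweep into segments makes it easy to give the upper range more time without lengthening the rest.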

For the second, I recorded my imitation directly into the microphone. It sounded pretty ridiculous, but that didn't really matter, so I went with it.

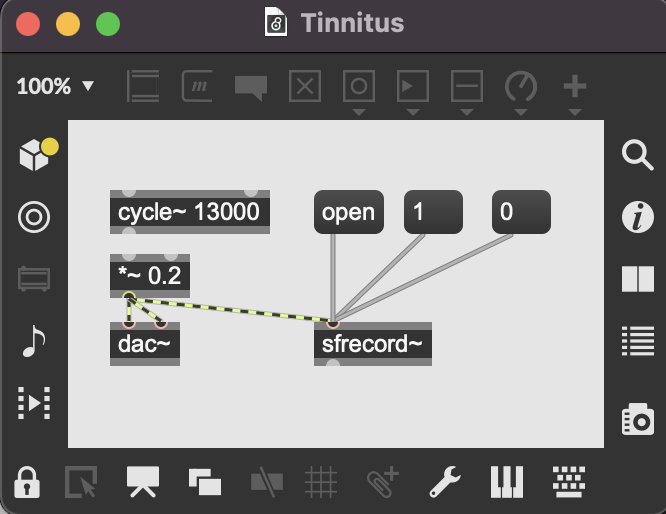

For the third, I again used Max/MSP, this time to generate a 13,000 Hz sine wave, and then panned it hard left in Ableton Live so that it was audible only on the left side, since tinnitus is often heard in just one ear.
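The same effect (a 13,000 Hz tone that fades in on the left side only) can be sketched in Python by writing stereo frames whose right channel is silent, which is what a hard-left pan amounts to. The linear fade-in, duration, peak level, and function names here are assumptions for illustration; in practice I did the panning in Ableton Live.

```python
import math
import struct
import wave


def render_left_tone(freq_hz=13000.0, seconds=5.0, sr=44100, peak=0.2):
    """Return (left, right) frames: a sine fading in linearly on the left only."""
    n = int(seconds * sr)
    frames = []
    for i in range(n):
        gain = peak * i / max(n - 1, 1)  # rises from silence to `peak`
        left = gain * math.sin(2.0 * math.pi * freq_hz * i / sr)
        frames.append((left, 0.0))  # right channel silent = hard-left pan
    return frames


def write_stereo_wav(path, frames, sr=44100):
    """Write (left, right) float frames as 16-bit stereo PCM."""
    def to_int(x):
        return int(max(-1.0, min(1.0, x)) * 32767)

    with wave.open(path, "wb") as wf:
        wf.setnchannels(2)
        wf.setsampwidth(2)
        wf.setframerate(sr)
        wf.writeframes(b"".join(
            struct.pack("<hh", to_int(l), to_int(r)) for l, r in frames))
```

A linear gain ramp is the simplest choice; an exponential (dB-linear) ramp would sound more even to the ear.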

For the fourth, I had no access to an official copy of the music, so I took the track from a YouTube playlist. Luckily, the sound quality was reasonable.

For the fifth, I recorded my own voice and subtly cut off the end of each syllable. For the sixth, I took that same recording and used Ableton's Collision instrument to overlay a metallic sound on each cut.
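A simplified version of the fifth and sixth examples can be sketched in Python: silence short gaps in a signal, remember where they are, and then fill exactly those gaps with another sound. This is an approximation under stated assumptions: it cuts at fixed intervals rather than at the end of each syllable as I did by hand, and it fills the gaps with random noise as a stand-in for the metallic Collision layer. The period, gap length, noise level, and function names are hypothetical.

```python
import random


def cut_gaps(samples, sr, period_s=0.25, gap_s=0.06):
    """Silence the last gap_s seconds of every period_s window.

    Returns the cut signal and a boolean mask marking the silenced samples.
    """
    period = int(period_s * sr)
    gap = int(gap_s * sr)
    out, mask = [], []
    for i, s in enumerate(samples):
        in_gap = (i % period) >= period - gap
        out.append(0.0 if in_gap else s)
        mask.append(in_gap)
    return out, mask


def fill_gaps(cut, mask, level=0.3, seed=0):
    """Replace only the silenced samples with uniform noise in [-level, level]."""
    rng = random.Random(seed)
    return [rng.uniform(-level, level) if g else s for s, g in zip(cut, mask)]
```

The output of `cut_gaps` corresponds to the fifth example and `fill_gaps` applied to it corresponds to the sixth; keeping the mask guarantees the masking sound lands only where speech was removed.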

For the seventh, there are quite a few ready-made examples on YouTube, so I took a well-produced 30-second clip. I then considered which parts listeners would recognise most readily and used four of its chord progressions.

The original example for the eighth was in Korean, and I suspected the effect I wanted to show would be lost on English-speaking listeners, so I decided to process an English source instead. I found a BBC News video on YouTube, extracted a short passage, and used EQ, Amp, and a phaser effect to make it as close as possible to what cochlear implant users hear. It isn't exactly the same, but it's close enough that I'm happy with it.
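To show roughly what the EQ part of that chain does, here is a Python sketch that narrows the frequency band with a one-pole high-pass followed by a one-pole low-pass, then applies gain with clipping. It is only an assumption-based approximation of the EQ and level stages: the 300 Hz and 3,400 Hz cutoffs, the gain, and the function names are hypothetical, the phaser is not modelled, and this is not a full cochlear-implant simulation.

```python
import math


def one_pole_lowpass(samples, cutoff_hz, sr):
    """First-order low-pass: y[n] = y[n-1] + a * (x[n] - y[n-1])."""
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sr)
    y = 0.0
    out = []
    for x in samples:
        y += a * (x - y)
        out.append(y)
    return out


def one_pole_highpass(samples, cutoff_hz, sr):
    """First-order high-pass: the input minus its low-passed version."""
    low = one_pole_lowpass(samples, cutoff_hz, sr)
    return [x - l for x, l in zip(samples, low)]


def band_limit(samples, sr, low_cut_hz=300.0, high_cut_hz=3400.0, gain=1.5):
    """Remove lows and highs, then boost and hard-clip to [-1, 1]."""
    band = one_pole_lowpass(one_pole_highpass(samples, low_cut_hz, sr), high_cut_hz, sr)
    return [max(-1.0, min(1.0, gain * s)) for s in band]
```

First-order filters roll off gently (about 6 dB per octave), so a real EQ with steeper slopes would remove more of the low and high content than this sketch does.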

For the ninth, the processed music was already in my interview recording, so I took it from there. For the original music, I extracted the audio from a YouTube video and edited it to the same passages.

That is how I created and placed all the music and sound effects. However, the audio paper now runs about 15 minutes, well over the 10-minute limit, so next I need to decide which parts of the script and audio I can cut.